VMware is a very fine virtualization platform that's been well-tuned. All that overhead of VT-d, virtual 10GbE switches for the storage network, VM storage over NFS, etc. is not hurting its performance, except perhaps on sequential reads. For as young as bhyve is, I'm happy with its performance compared to VMware, although it does appear to be slower on the CPU-intensive tests.

I've never understood the advantage of Type-1 hypervisors (such as VMware and Xen) over Type-2 hypervisors (like KVM and bhyve). Type-1 proponents say the hypervisor runs on bare metal instead of an OS… I'm not sure how VMware isn't considered an OS, except that it is a purpose-built OS and probably smaller. It seems you could take a Linux distribution running KVM and strip away features until at some point it becomes a Type-1 hypervisor. That is all fine, but it could actually be a disadvantage if you wanted some of those features (like ZFS). A Type-2 hypervisor that supports ZFS appears to have a clear advantage (at least theoretically) over a Type-1 for this kind of setup. In fact, FreeBSD may end up becoming the best all-in-one virtualization/storage platform. You really only need to run bhyve when virtualizing a different OS.

bhyve is still pretty young, but I thought I'd run some tests to see where it's at…

Environments

This is running on my X10SDV-F Datacenter in a Box Build.

In all environments the following parameters were used:

- IBM ServeRAID M1015 flashed to IT mode.
- 4 x HGST Ultrastar 7K300 2TB enterprise drives in RAID-Z.
- One DC S3700 100GB over-provisioned to 8GB, used as the log device.
- Guest (where tests are run): Ubuntu 14.04 LTS, 16GB, 4 cores, 1GB memory.
- OS defaults are left as is; I didn't try to tweak the number of NFS servers, sd.conf, etc.

I ran each test 5 times on each platform to warm up the ARC. The results are the average of the next 5 test runs.

I only tested an Ubuntu guest because it's the only distribution I run (in quantity, anyway) in addition to FreeBSD. I suppose a more thorough test should include other operating systems.

The environments were set up as follows:

1 – VM under ESXi 6 using NFS storage from FreeNAS 9.3 VM via VT-d
- Storage shared with VMware via NFSv3, virtual storage network on VMXNET3.
- Ubuntu guest given VMware para-virtual drivers.

2 – VM under ESXi 6 using NFS storage from OmniOS VM via VT-d
- OmniOS r151014 LTS installed under ESXi.
- Ubuntu guest given VMware para-virtual drivers.

Guest storage is a file image on a ZFS dataset.

When virtual machine guest operating systems send SCSI commands to their virtual disks, the SCSI virtualization layer translates these commands to VMFS file operations. To protect the integrity of iSCSI headers and data, the iSCSI protocol defines error correction methods known as header digests and data digests.
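The header and data digests mentioned above are, per RFC 3720, CRC32C checksums (the Castagnoli polynomial), negotiated at login through the `HeaderDigest` and `DataDigest` keys. Below is a minimal bitwise sketch of the checksum itself. It assumes nothing about how a particular initiator or target places the digest on the wire, and `crc32c` and `digest_matches` are illustrative names of our own, not part of any ESXi or iSCSI library.

```python
def crc32c(data: bytes) -> int:
    """Bitwise CRC32C (Castagnoli): reflected polynomial 0x82F63B78,
    initial value 0xFFFFFFFF, final XOR 0xFFFFFFFF."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # Shift right one bit; if the bit shifted out was 1,
            # fold in the reflected Castagnoli polynomial.
            if crc & 1:
                crc = (crc >> 1) ^ 0x82F63B78
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF


def digest_matches(payload: bytes, received_digest: int) -> bool:
    """Hypothetical receiver-side check: recompute the digest over the
    received bytes and compare it with the digest that arrived with them."""
    return crc32c(payload) == received_digest


if __name__ == "__main__":
    # b"123456789" is the conventional CRC check input; CRC32C gives 0xe3069283.
    print(hex(crc32c(b"123456789")))
    sent = crc32c(b"SCSI data segment")
    print(digest_matches(b"SCSI data segment", sent))  # intact payload
    print(digest_matches(b"SCSI data segmenT", sent))  # corrupted payload
```

The bit-at-a-time loop keeps the algorithm visible; real iSCSI stacks use table-driven or hardware-accelerated CRC32C, which produce the same values much faster.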

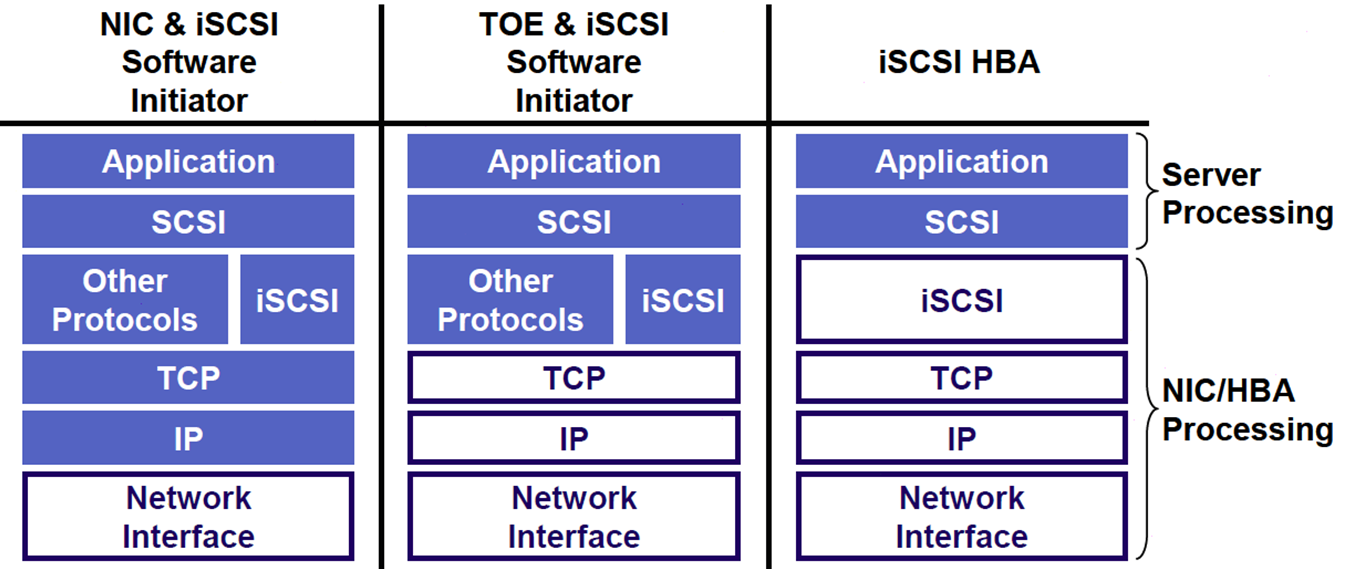

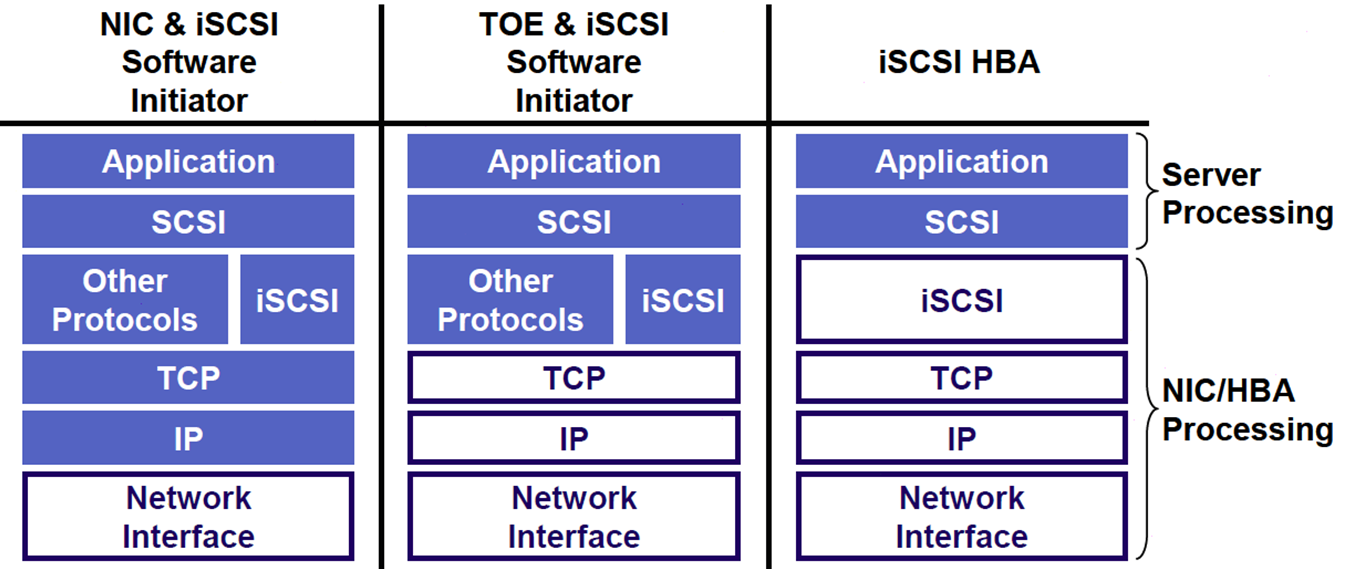

ESXi can connect to external SAN storage using the Internet SCSI (iSCSI) protocol. In addition to traditional iSCSI, ESXi also supports iSCSI Extensions for RDMA (iSER). When the iSER protocol is enabled, the host can use the same iSCSI framework, but replaces the TCP/IP transport with the Remote Direct Memory Access (RDMA) transport.

iSCSI SANs use Ethernet connections between hosts and high-performance storage subsystems. When transferring data between the host server and storage, the SAN uses a technique known as multipathing. With multipathing, your ESXi host can have more than one physical path to a LUN on a storage system.

A single discoverable entity on the iSCSI SAN, such as an initiator or a target, represents an iSCSI node. iSCSI uses a special unique name to identify an iSCSI node, either target or initiator. To access iSCSI targets, your ESXi host uses iSCSI initiators.

In the ESXi context, the term target identifies a single storage unit that your host can access. The terms storage device and LUN describe a logical volume that represents storage space on a target. Typically, the terms device and LUN, in the ESXi context, mean a SCSI volume presented to your host from a storage target and available for formatting. ESXi supports different storage systems and arrays.

Discovery, Authentication, and Access Control

You can use several mechanisms to discover your storage and to limit access to it.

How Virtual Machines Access Data on an iSCSI SAN

ESXi stores a virtual machine's disk files within a VMFS datastore that resides on a SAN storage device.
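The node naming rule mentioned above (each initiator and target carries a unique iSCSI name) is defined in RFC 3720, which specifies two main formats: `iqn.` names (a yyyy-mm date, a reversed domain name, and an optional `:`-separated suffix) and `eui.` names (`eui.` followed by 16 hex digits of an EUI-64 identifier), each at most 223 bytes long. The sketch below is a simplified validator under stated assumptions: ASCII input only, lowercasing instead of the full stringprep normalization the RFC requires, and no support for the later `naa.` format. `classify_iscsi_name` is a hypothetical helper, not an ESXi API.

```python
import re
from typing import Optional

# Simplified patterns; assumption: ASCII names, no stringprep normalization.
# iqn.<yyyy>-<mm>.<reversed domain>[:<anything without whitespace>]
IQN_RE = re.compile(r"iqn\.\d{4}-(0[1-9]|1[0-2])\.[a-z0-9-]+(\.[a-z0-9-]+)*(:\S+)?")
# eui.<16 hex digits>
EUI_RE = re.compile(r"eui\.[0-9a-f]{16}")
MAX_NAME_BYTES = 223  # RFC 3720 upper bound on iSCSI name length


def classify_iscsi_name(name: str) -> Optional[str]:
    """Return "iqn" or "eui" if the name looks valid, else None."""
    if len(name.encode("utf-8")) > MAX_NAME_BYTES:
        return None
    lowered = name.lower()  # approximation of the RFC's case normalization
    if IQN_RE.fullmatch(lowered):
        return "iqn"
    if EUI_RE.fullmatch(lowered):
        return "eui"
    return None


if __name__ == "__main__":
    for candidate in (
        "iqn.2001-04.com.example:storage:diskarrays-sn-a8675309",
        "eui.02004567A425678D",
        "iqn.2001-13.com.example",  # invalid month
        "storage-array-1",          # no recognized prefix
    ):
        print(candidate, "->", classify_iscsi_name(candidate))
```

The first two names are the examples used in RFC 3720; a check like this only catches malformed names and says nothing about whether a target with that name is actually reachable.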
